Code Review Guidelines ✅

What Should Authors and Reviewers Actually Do? (6 min)

Code review is a process that allows others to review your code changes.

The person who submits the code changes is referred to as the “Author”, while those reviewing the changes are referred to as “Reviewers”.

The primary purpose of code reviews is to enhance the quality of the released solution and to improve collaboration and knowledge transfer within the team.

However, doing code reviews right is not an easy task.

There are many problems related to code reviews, like wasted human effort and time, wrong focus → wrong results, communication breakdown, criticism, and decreased developer productivity and team velocity.

In today’s article, I’d like to share several code review guidelines, both for Authors and Reviewers, that I've seen working in teams and companies.

Let’s dive in!

The Hidden Cost of Broken Code Reviews

Reviews Become Time Sinks

The average PR takes 2-4 days from submission to merge.

Teams spend 8-12 hours per week on code reviews instead of building features.

Wrong Focus → Wrong Results

Developers spend 50% of review time on formatting, naming conventions, and style preferences.

Meanwhile, logic bugs, security vulnerabilities, and performance issues slide past because reviewers are mentally exhausted from nitpicking.

Communication Breakdown

Authors write vague PR descriptions.

Reviewers give unclear feedback and/or criticize the author.

Nobody knows if an issue is critical or optional.

Simple fixes turn into multi-day discussions.

The cost isn’t just velocity; it’s also team morale, code quality, and customer trust when bugs reach production.

Code reviews are expensive because they involve many people.

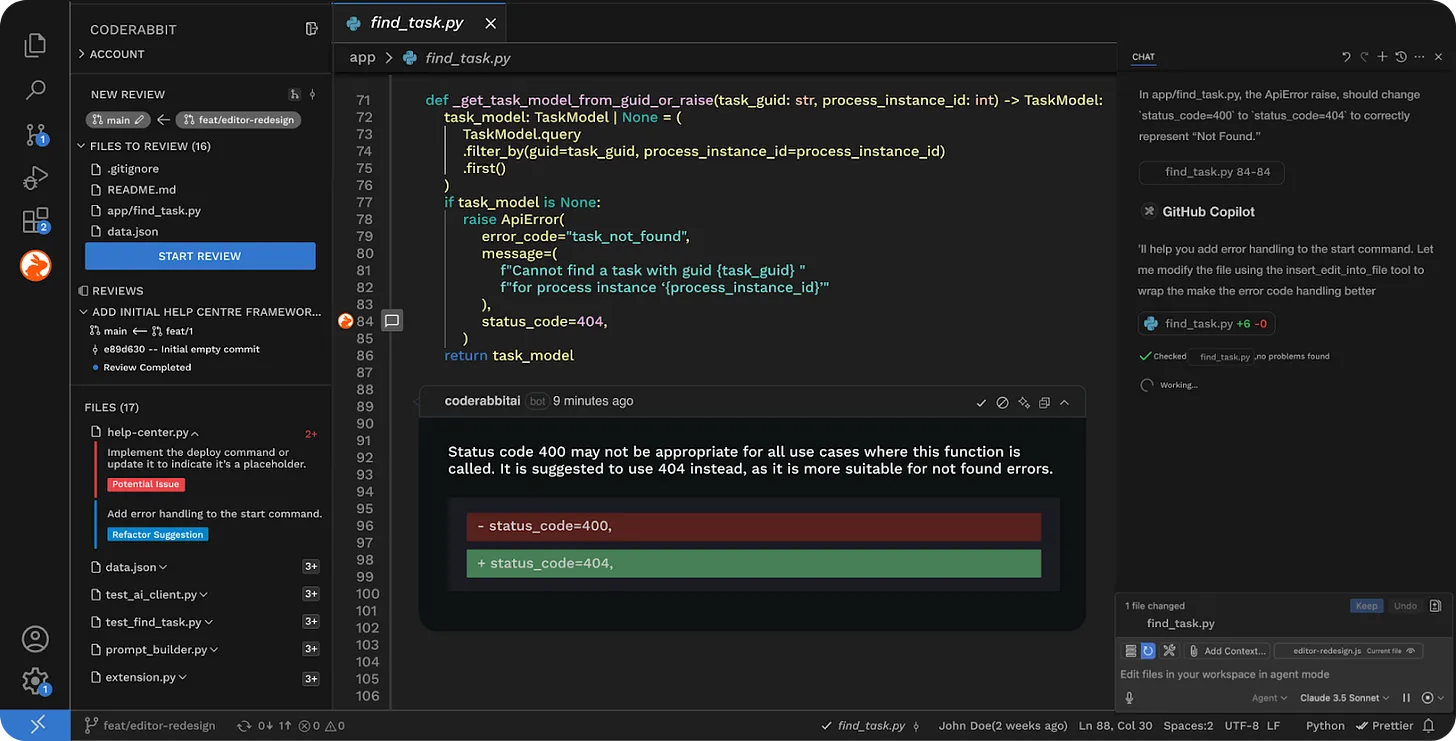

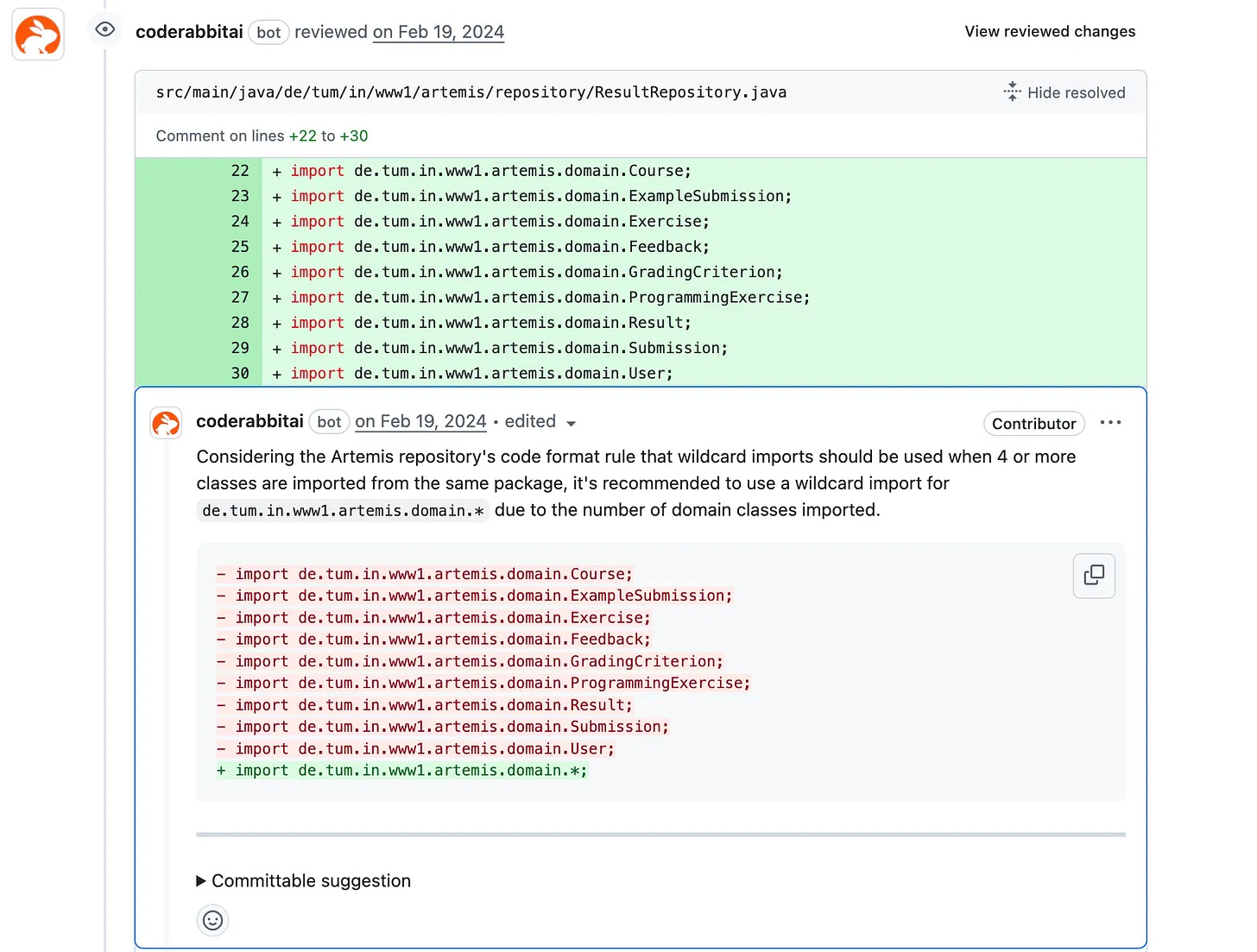

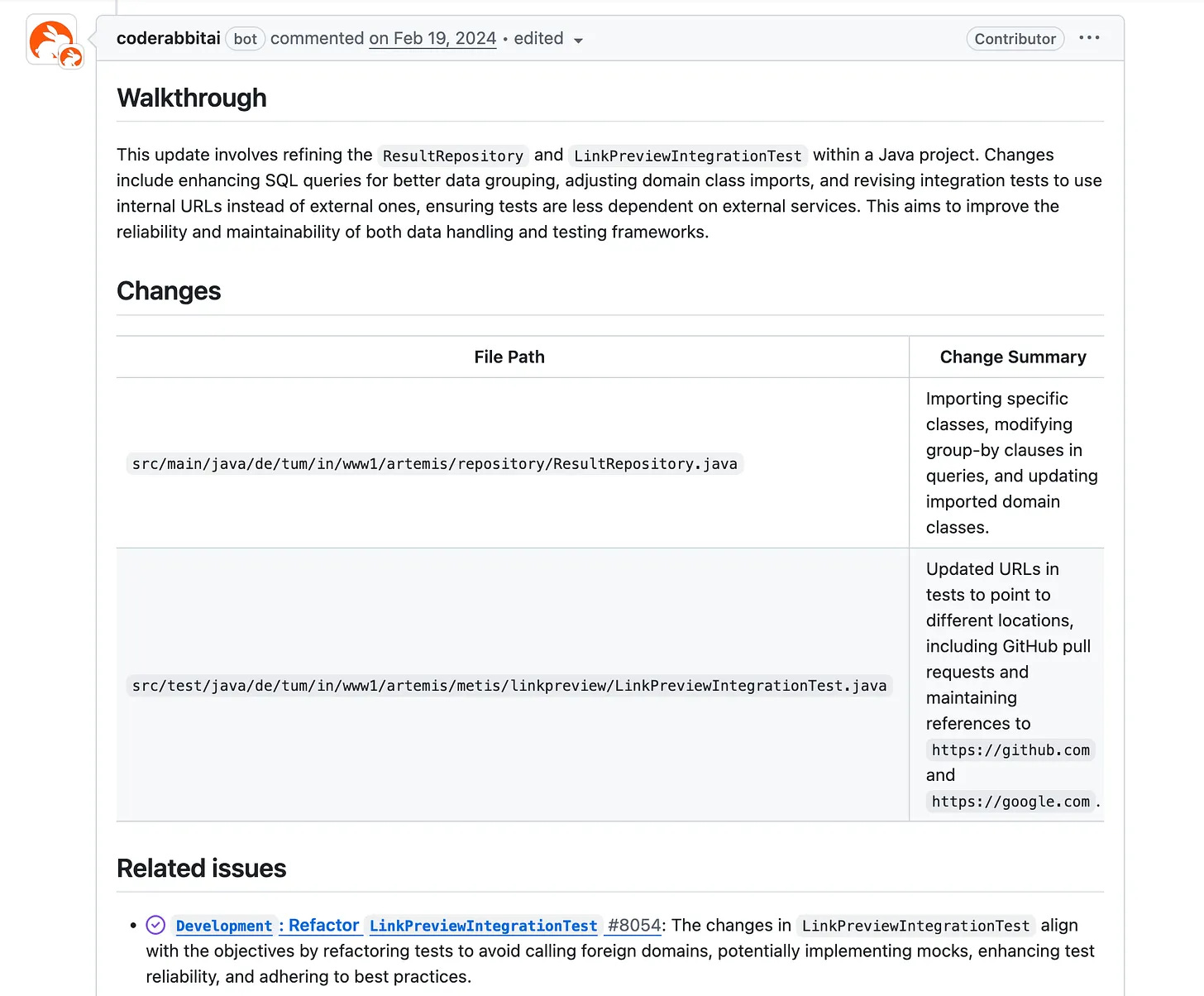

That’s why I’m happy to partner with CodeRabbit on this newsletter.

With the help of CodeRabbit, I believe we can make code reviews cheaper by automating the process, fixing the obvious problems, and focusing on the right things.

Best Practices for Authors:

Review your own code changes and PR before sharing them with others.

Remove debug statements and commented code.

Check for obvious bugs or typos.

Consider using CodeRabbit to get instant feedback.
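Part of this self-review can be scripted. Here is a minimal sketch, assuming you feed it a unified diff (for example, the output of `git diff`). The pattern list and the function name `find_debug_leftovers` are hypothetical, so adjust them to the languages your team uses.

```python
import re

# Hypothetical patterns for leftover debug code; extend for your own stack.
DEBUG_PATTERNS = [
    re.compile(r"\bprint\("),
    re.compile(r"\bconsole\.log\("),
    re.compile(r"\bbreakpoint\(\)"),
    re.compile(r"\bpdb\.set_trace\(\)"),
]


def find_debug_leftovers(diff_text: str) -> list[tuple[int, str]]:
    """Return (diff_line_number, code) pairs for added lines that look like debug code."""
    hits = []
    for number, line in enumerate(diff_text.splitlines(), start=1):
        # Only inspect added lines, and skip the "+++ b/file" header.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        added = line[1:]
        if any(pattern.search(added) for pattern in DEBUG_PATTERNS):
            hits.append((number, added.strip()))
    return hits
```

A check like this will produce false positives (a legitimate `print(` in a CLI tool, for instance), so treat its output as a reminder to look again, not as a gate.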

Ensure consistent naming and formatting.

Especially if your company follows a style guide.

Test your code changes.

Both manually and with automated tests.

Know what each new line does.

This is crucial in the era of vibe coding.

Automate the easy stuff.

Add formatters, linters, and rules to prevent nitpicking.

Consider using CodeRabbit for finding potential issues.

Keep your code changes and PR small.

Research shows that review quality drops dramatically as the number of files, lines, and changes grows, for example beyond 400 lines.

If your feature needs more code, break it into logical steps that can be reviewed independently.
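A size limit is easy to check automatically. The sketch below assumes you pass it the text produced by `git diff --numstat`, where each row is `added<TAB>deleted<TAB>path`. The 400-line threshold comes from the example above, and the function names are my own.

```python
MAX_CHANGED_LINES = 400  # threshold from the "400 lines" example; tune per team


def changed_lines(numstat: str) -> int:
    """Sum added and deleted lines from `git diff --numstat` output."""
    total = 0
    for row in numstat.splitlines():
        parts = row.split("\t")
        if len(parts) < 3:
            continue  # not a numstat row
        added, deleted = parts[0], parts[1]
        # Binary files are reported as "-" for both counts and are skipped here.
        if added.isdigit() and deleted.isdigit():
            total += int(added) + int(deleted)
    return total


def is_too_large(numstat: str, limit: int = MAX_CHANGED_LINES) -> bool:
    """Return True when the change exceeds the agreed review size."""
    return changed_lines(numstat) > limit
```

Counting added plus deleted lines is only one possible measure. Some teams may prefer to exclude generated files or lock files before applying the limit.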

Write a clear PR description. Try to answer the questions:

What - “Add rate limiting to login endpoint...”

Why - “Prevent brute force attacks…”

How - “Implement rate limiting algorithms, block IPs…”

Testing - “Tested with 100 concurrent requests and verified…”

For visual changes, include relevant screenshots and/or videos that showcase the changes.

For bug fixes, outline the Before and After behaviors, and explain how you fixed the bug.

Consider using CodeRabbit for generating a summary of the changes.
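If you want to nudge authors toward this structure, a small check can flag descriptions that skip a section. This is a rough sketch under my own assumptions: sections are written as a line starting with the section name followed by `-` or `:`, and `missing_sections` is a hypothetical helper, not part of any tool.

```python
import re

REQUIRED_SECTIONS = ("What", "Why", "How", "Testing")


def missing_sections(description: str) -> list[str]:
    """Return the required sections that a PR description does not contain."""
    missing = []
    for name in REQUIRED_SECTIONS:
        # Matches lines such as "What - ..." or "## What: ..." (case-insensitive).
        pattern = re.compile(
            rf"^\W*{name}\b\s*[-:]\s*\S", re.IGNORECASE | re.MULTILINE
        )
        if not pattern.search(description):
            missing.append(name)
    return missing
```

For example, a description containing only the "What" and "Why" lines would be reported as missing "How" and "Testing".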

Document the non-obvious changes.

Try to answer questions with the code itself.

Respond graciously to critiques.

Remember, it’s not about you, it’s about the code.

If you’re confused, ask clarifying questions.

Don’t guess, ask questions instead.

Ex: "When you say 'this could be cleaner,' do you mean splitting this function or renaming the variables?"

Be patient when your reviewer is wrong.

Reviewers might be wrong due to a lack of context or experience, so try to provide it and help them understand your perspective.

Minimize the lag between rounds of review.

Acknowledge feedback within 4 hours, even if you can’t fix everything immediately.

Quick responses show respect for your reviewer’s time.

Consider doing a pair programming session with the reviewer if needed.

Peer code reviews work as a two-way transfer of knowledge when conducted carefully.

Both the reviewer and the author can learn new things, either for the project or in general.

Best Practices for Reviewers:

Review PRs within 24 hours of submission.

This not only unblocks the author so they can merge their code but also speeds up the development process.

Prioritize your review using this framework:

Correctness: Does the code work as intended?

Organization: Is it well-structured and maintainable, and does it follow the style guides?

Readability: Can the team and future me understand and modify this later?

Reliability: Is the solution fault-tolerant?

Scalability: Can the solution grow to accommodate higher future load?

Edge cases: What could go wrong in production?

Prioritize your feedback:

Critical (must fix) - logic errors and bugs; security vulnerabilities; breaking changes; performance bottlenecks.

Important (should fix) - poor code organization; missing error handling; inadequate tests.

Suggestions (optional) - alternative implementations; style improvements; minor optimizations.
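The three levels above can be made explicit in the comments themselves. Here is a minimal sketch of that idea. The names `Severity`, `Comment`, and `blocks_merge` are my own, and treating only Critical comments as blocking is an assumption you may want to change.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    CRITICAL = 1    # must fix
    IMPORTANT = 2   # should fix
    SUGGESTION = 3  # optional


@dataclass
class Comment:
    severity: Severity
    text: str


def format_comment(comment: Comment) -> str:
    """Prefix the comment so the author sees its priority at a glance."""
    return f"[{comment.severity.name}] {comment.text}"


def sort_by_priority(comments: list[Comment]) -> list[Comment]:
    """Order comments so that must-fix items come first."""
    return sorted(comments, key=lambda c: c.severity)


def blocks_merge(comments: list[Comment]) -> bool:
    """Assumption: only unresolved Critical comments block approval."""
    return any(c.severity is Severity.CRITICAL for c in comments)
```

With a prefix like `[CRITICAL]` or `[SUGGESTION]` on every comment, the author no longer has to guess which feedback is mandatory.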

Don’t make it personal! Review the code, not the author.

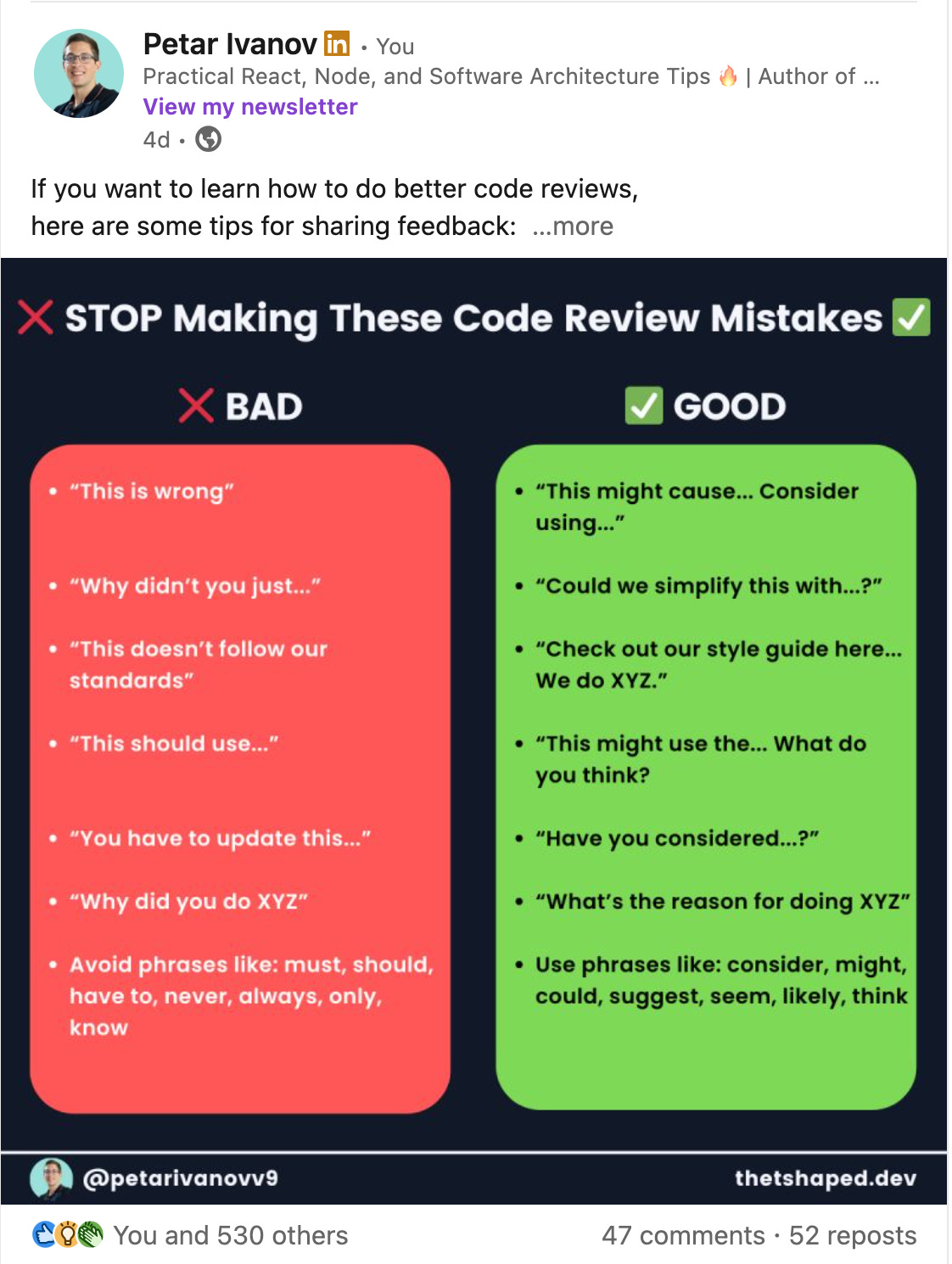

Learn to give better feedback. Ask, don’t tell!

When you add a comment, have a strong WHY and a suggestion.

Mark minor comments as NIT and don’t block PRs on them.

Praise the good things.

Engineers, and people in general, tend to criticize when doing any kind of review, while forgetting to mention any good.

To learn from the author, the reviewer should also pay attention to clever code; noticing and acknowledging it is a good habit.

Consider doing a pair programming session with the author if needed.

Sometimes, it’s better to sit together and resolve all issues instead of going through multiple rounds of reviews.

When critical issues are resolved and code is “good enough” → Approve it.

Code review is part of your job.

If you're not doing code reviews regularly, you are letting your teammates down.

CodeRabbit is an AI code review tool to reduce developer workload.

Here’s how it helps with code review flow:

Do routine checks and reduce the time spent on reviews from days to minutes, thus improving developer velocity.

Do consistent reviews without getting tired or biased, thus reducing the risk of missing important issues due to human mistakes.

Provide mentorship to junior engineers through detailed feedback.

Put simply, CodeRabbit complements human reviewers. It serves 1+ million repositories and has reviewed over 10 million pull requests. And it remains the most installed app on GitHub and GitLab.

📌 TL;DR

Review your own code changes and PR first.

Ensure consistent naming and formatting.

Test your code changes.

Know what each new line does.

Automate the easy stuff.

Keep your code changes and PR small.

Write a clear PR description.

Document the non-obvious changes.

Respond graciously to critiques.

If you’re confused, ask clarifying questions.

Be patient when your reviewer is wrong.

Minimize the lag between rounds of review.

Review PRs within 24 hours of submission.

Prioritize your review using a framework and quality attributes.

Prioritize your feedback.

Don’t make it personal! Review the code, not the author.

Learn to give better feedback. Ask, don’t tell!

Praise the good things.

When critical issues are resolved and code is “good enough” → Approve it.

Code reviews are part of your job.

Hope this was helpful.

See you next week!

Today’s action step: Take a look at your code review process and highlight areas for improvement. Try to follow or incorporate one of the mentioned tips in your daily routine.

👋 Let’s connect

You can find me on LinkedIn, Twitter (X), Bluesky, or Threads.

I share daily practical tips to level up your skills and become a better engineer.

Thank you for being a great supporter, reader, and for your help in growing to 27.5K+ subscribers this week 🙏